Using Juju for development

Juju works great for software development involving simple environments and is amazing for complex environments. A recent question on Ask Ubuntu “Is Juju a suitable tool for development as well as deployment?” made me realize that we use Juju for development every day but there really isn’t much documentation on the subject.

For the rest of this post I’m going to assume that you are already familiar with the concept of Juju and what problems it solves on the deployment side of things. If you aren’t, I recommend reading an earlier post of mine “Juju - Explain it to me like I’m 5”.

One of the biggest problems when developing any kind of software is getting the dependencies up and running in a way that matches the production environment closely enough to be sure you aren’t going to run into “this environment only” bugs. Sure, you can install MySQL on your local machine and load a database dump into it, but you also have to make sure that you apply all of the same configuration, indexes, build flags, etc. as the production environment.

Even once you get it up and running, you still need to be able to update it whenever someone else on the project makes changes, all while keeping high production parity and avoiding unnecessary downtime.

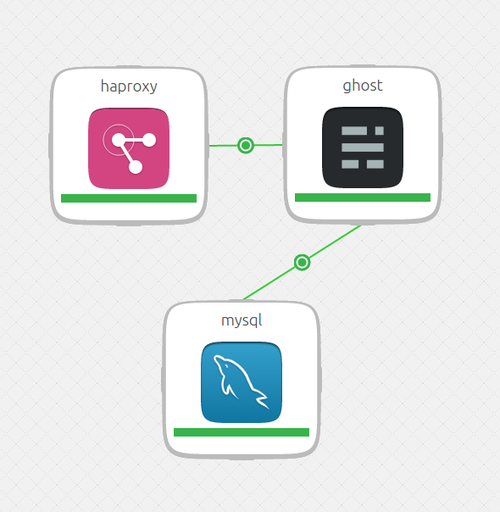

To illustrate the benefits of using Juju for development I’m going to use a fictitious photo and video sharing website. A website like this would require multiple services: a load balancer, web server, database, blob store, user authentication, photo processor, and video processor.

Keep in mind that a Juju Charm can be written using any programming language or DSL that can be executed on the host machine. This means it can use Puppet, Chef, Python, JavaScript, Docker, and pretty much anything else you would like to use. Juju provides distinct advantages for project development depending on where the project is in its lifecycle. For our photo and video site, let’s first assume that we’re just starting the project, and then later on we’ll assume that the project is mature, released, and still under active development.

Just starting out

Typically when a project starts you’re only going to need a couple of services: the database and your web server. Let’s start our environment and install the database and web server on our local machine.

juju bootstrap local

juju deploy apache2

juju deploy mongodb

Great, it’s 5 minutes in and we now have apache2 and mongodb running on our machine in separate LXC containers. We can now start developing our website and pointing it at these services.
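Before pointing your site at the new services, it helps to confirm that they have actually finished coming up. Here is a minimal sketch of how I might script that check. It assumes the Juju 1.x style of `juju status` output, where each machine and unit reports a line such as `agent-state: started`; the helper names `juju_not_started` and `wait_for_started` are my own for illustration and are not part of Juju.

```shell
# Print how many machines/units `juju status` does not yet report as started.
# Assumption: status lines look like "agent-state: started" (Juju 1.x output).
juju_not_started() (
    status="$(juju status)" || exit 2   # treat a failed status call as "not ready"
    printf '%s\n' "$status" |
        awk '/agent-state:/ && !/agent-state: started/ { n++ } END { print n + 0 }'
)

# Poll until every agent is started, or give up.
# Usage: wait_for_started [tries] [delay-seconds]   (hypothetical helper)
wait_for_started() (
    tries="${1:-60}"
    delay="${2:-5}"
    while [ "$tries" -gt 0 ]; do
        if pending="$(juju_not_started)" && [ "$pending" -eq 0 ]; then
            echo "all agents started"
            return 0
        fi
        tries=$((tries - 1))
        sleep "$delay"
    done
    echo "timed out waiting for agents" >&2
    return 1
)
```

Running `wait_for_started` after the deploy commands would block until everything reports as started, or fail after roughly five minutes with the defaults. If your Juju version formats its status differently, the pattern in `juju_not_started` would need adjusting.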

Parallel to this, your teammate is working on the user authentication service. It’s going well, and they want someone to help them test it in the application environment. So let’s get the service that they have been working on.

mkdir -p ~/charms/trusty && cd ~/charms/trusty

git clone --depth 1 [email protected]:photovideo/authenticator

juju deploy --repository=$HOME/charms local:trusty/authenticator

For more information on deploying local charms, see this post on Ask Ubuntu.

A few minutes later you have an identical copy of their user authenticator service, and you can point your website to it and give it a try. A little later the authenticator service has been updated and you’d like to run the new version.

cd ~/charms/trusty/authenticator

git pull

juju upgrade-charm --repository=$HOME/charms authenticator

This process repeats itself for each service and across each member of your team, allowing everyone to update their dependencies within minutes to identical representations of how they’ll run in production.
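Since the pull-and-upgrade steps are the same for every service, they can be wrapped in a small loop. The following is only a sketch under a few assumptions: every charm lives in its own git checkout under `~/charms/trusty`, and each service was deployed under the same name as its charm directory. The `update_charms` function and the `CHARM_REPO` and `SERIES` variables are hypothetical names I’m using for illustration.

```shell
# Pull and upgrade every charm checked out under $CHARM_REPO/$SERIES.
# Assumptions: each directory is a git checkout, and each service is deployed
# under the same name as its charm directory. update_charms is hypothetical.
update_charms() (
    repo="${CHARM_REPO:-$HOME/charms}"
    series="${SERIES:-trusty}"
    for dir in "$repo/$series"/*/; do
        [ -d "$dir/.git" ] || continue   # skip anything that is not a git checkout
        name="$(basename "$dir")"
        echo "updating $name"
        # Pull in a subshell so the working directory is not changed for the loop.
        (cd "$dir" && git pull) || { echo "git pull failed for $name" >&2; continue; }
        juju upgrade-charm --repository="$repo" "$name"
    done
)
```

Calling `update_charms` with no arguments would walk `~/charms/trusty`. If a service was deployed under a different name than its charm, you would need to upgrade that one by hand.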

Released project

Now that your project has been released, deployed using Juju, and is running in production, you’ve had a chance to take advantage of Juju’s deployment and scaling features. But how does Juju help you develop now?

In some ways it’s even easier to deploy. In this case, I’m going to assume that your services are private and not stored in the Juju Charm Store. If they were in the store, you wouldn’t have to clone the repositories first.

mkdir -p ~/charms/trusty && cd ~/charms/trusty

git clone --depth 1 [email protected]:photovideo/mongodb

git clone --depth 1 [email protected]:photovideo/authenticator

…

juju-quickstart -e local photovideo.yaml

Taking advantage of Juju Quickstart and the Juju bundles functionality you can deploy your entire environment with identical services, configuration, and machine placements. This will open up the GUI which will allow you to modify the machine placement of any of those services and change configuration values before deploying to your machine. Once you hit commit, sit back and wait for it to deploy an identical environment to your production environment on your local machine.

Now you can work on the specific service you’re interested in, within an environment identical to everyone else’s on your team. And when a service gets updated by another member of your team, it’s trivial to update.

cd ~/charms/trusty/authenticator

git pull

juju upgrade-charm --repository=$HOME/charms authenticator

I hope this gets you excited to use Juju for development as well as deployment. My team has been using Juju for development this way for over a year. It allows us to be more productive because we don’t have to waste time installing and updating services the hard way.

I’ll be creating a follow-up to this post with real code examples and workflows for doing the actual development of these services, so stay tuned! Thanks for reading. If you have any questions or comments, leave them below or hit me up on Twitter @fromanegg.